2 January 2025

Ahmedabad University Doctoral Student Develops AI-Assisted Model for Early Epilepsy Detection

Epilepsy, a neurological disorder marked by seizures, produces abnormal patterns in the electroencephalogram (EEG) signals that clinicians use for diagnosis. Yet manual EEG analysis is inherently subjective, and artificial intelligence (AI) detection is often opaque, which creates a diagnostic dilemma. Current AI models generally lack explainability, so clinicians, who need to understand the reasons behind a decision, remain hesitant to trust their judgements.

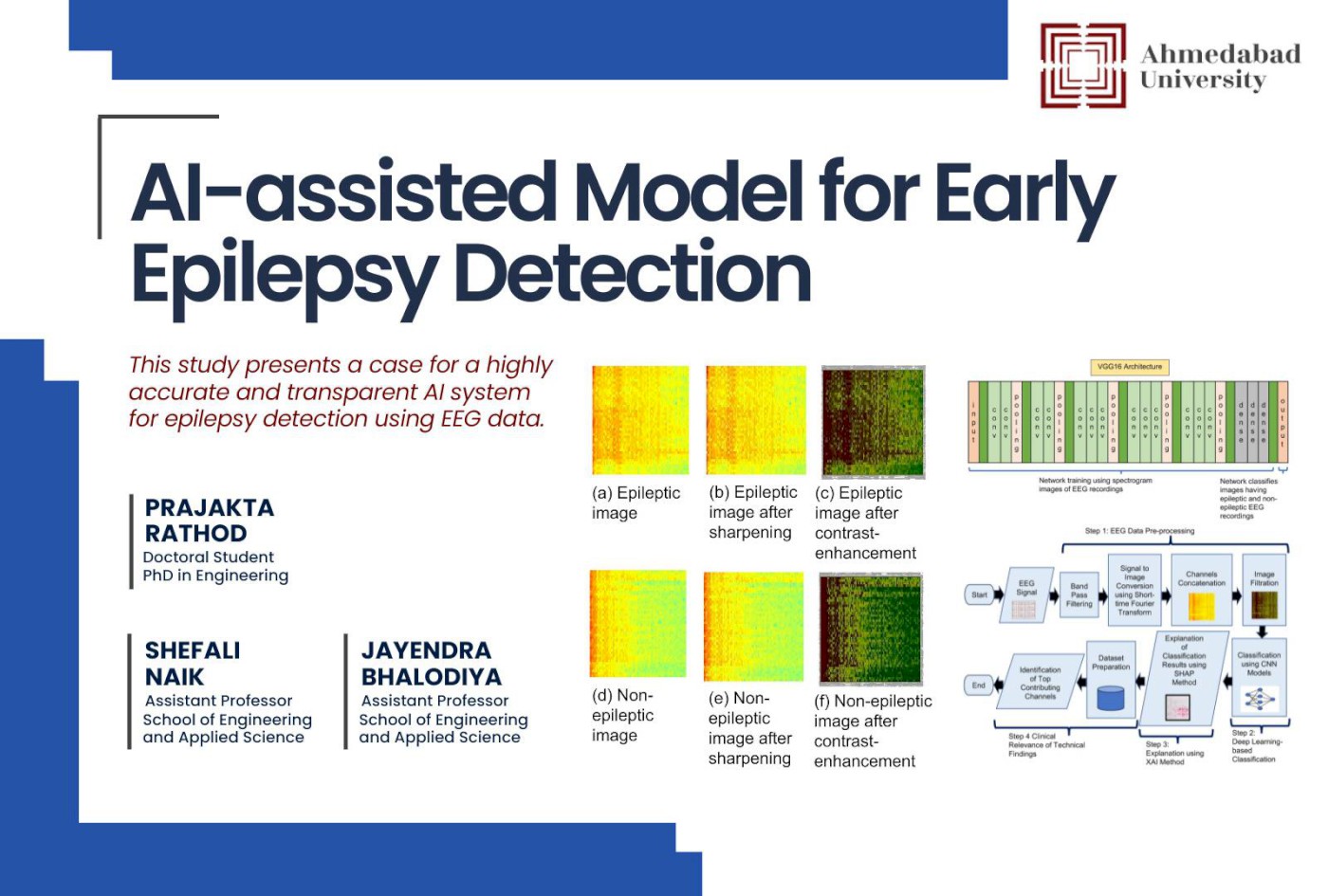

Prajakta Rathod, a PhD student at Ahmedabad University, supervised by Professor Jayendra Bhalodiya and supported by Professor Shefali Naik, has developed an Explainable AI (XAI)-based methodology to bring clarity to diagnosis. The method classifies patients as epileptic or non-epileptic with high accuracy whilst also providing interpretable results that medical professionals can readily understand.

Their study makes the case for a transparent AI system that detects epilepsy from EEG data. By combining deep learning, specifically convolutional neural networks (CNNs), with the explanatory power of SHAP (SHapley Additive exPlanations), the model classifies patients and gives clear, justifiable reasoning for its conclusions. This has the potential to revolutionise AI-based epilepsy diagnostics and to support neurologists in monitoring patients with epilepsy. The methodology comprises four stages:

1. EEG signals are preprocessed, transformed into spectrogram images (visual representations of how a signal's frequency content changes over time), and enhanced through filtering.
2. Three CNNs (DenseNet121, ResNet18, and VGG16) each predict the patient's status from the EEG spectrograms.
3. The SHAP method is applied to explain each classification decision by identifying the pixels in the spectrogram images that mattered most to it.
4. The EEG channels that contributed most to each classification are identified, helping neurologists focus on the specific brain regions implicated in epilepsy.
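The first and last stages can be illustrated with a short Python sketch. It is an illustration under stated assumptions, not the authors' implementation: it assumes a multichannel EEG recording held as a NumPy array of shape (channels, samples), computes a log-power spectrogram for each channel with a Hann-windowed short-time Fourier transform, and stacks the channel spectrograms vertically into one image. The study's filtering step and the CNN classifiers are not reproduced. Given a pixel-level importance map of the same shape as the image (for instance, SHAP values produced for a trained classifier), the sketch ranks channels by the total absolute attribution in their band of rows. The window length, hop size, stacked-image layout, and the functions `channel_spectrogram`, `eeg_to_image`, and `rank_channels` are all hypothetical, and the data are synthetic noise rather than real EEG.

```python
import numpy as np


def channel_spectrogram(signal, win_len=256, hop=128):
    """Log-power spectrogram of one EEG channel via a Hann-windowed STFT.

    Returns shape (win_len // 2 + 1, n_frames); row k corresponds to a
    frequency of k * fs / win_len Hz for a sampling rate fs.
    """
    if len(signal) < win_len:
        raise ValueError("signal is shorter than one analysis window")
    window = np.hanning(win_len)
    n_frames = 1 + (len(signal) - win_len) // hop
    # Each column is one windowed segment of the signal.
    frames = np.stack(
        [signal[i * hop : i * hop + win_len] * window for i in range(n_frames)],
        axis=1,
    )
    power = np.abs(np.fft.rfft(frames, axis=0)) ** 2
    return 10 * np.log10(power + 1e-12)  # decibels; epsilon avoids log(0)


def eeg_to_image(eeg, win_len=256, hop=128):
    """Stack per-channel spectrograms vertically (hypothetical layout).

    eeg has shape (n_channels, n_samples); each channel occupies a band of
    win_len // 2 + 1 consecutive rows in the returned image.
    """
    return np.vstack([channel_spectrogram(ch, win_len, hop) for ch in eeg])


def rank_channels(attributions, n_channels):
    """Rank channels by total absolute attribution within their row band.

    attributions is a pixel-level importance map shaped like the image
    (e.g. SHAP values); returns (channel_index, score) pairs, largest first.
    """
    bands = np.split(np.abs(attributions), n_channels, axis=0)
    scores = np.array([band.sum() for band in bands])
    order = np.argsort(scores)[::-1]
    return [(int(i), float(scores[i])) for i in order]


# Usage with synthetic data: 4 channels, 10 s at a hypothetical 256 Hz.
fs = 256
rng = np.random.default_rng(0)
eeg = rng.standard_normal((4, fs * 10))
image = eeg_to_image(eeg)
print(image.shape)  # (516, 19): 4 bands of 129 frequency rows, 19 frames

# Stand-in for a SHAP map: importance placed only in channel 2's band.
attr = np.zeros_like(image)
attr[2 * 129 : 3 * 129, :] = 1.0
print(rank_channels(attr, n_channels=4)[0])  # (2, 2451.0)
```

Summing absolute values treats positive and negative SHAP contributions alike as evidence of importance; summing the signed values instead would indicate whether a channel pushed the prediction towards or away from the epileptic class.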

VGG16, ResNet18, and DenseNet121 achieved classification accuracies of 97.55 per cent, 95 per cent, and 91.29 per cent, respectively. For explainability, the ranking was reversed: DenseNet121 + SHAP performed best, followed by ResNet18 + SHAP and then VGG16 + SHAP.

The study therefore reveals a trade-off: VGG16 is the most accurate, while DenseNet121 paired with SHAP offers the clearest explanations. Combining this explainability with high accuracy makes the approach a reliable tool for automated epilepsy detection, fostering trust and usability in clinical settings.

Published in the journal "Neural Computing and Applications" under the title "Epileptic signal classification using convolutional neural network and Shapley additive explainable artificial intelligence method," this research offers a valuable resource for neurologists and EEG technologists. It points towards faster, more objective epilepsy diagnosis that supports clinicians' work and reduces the impact of human bias.

Through a robust research culture, Ahmedabad University empowers professors and students to conduct impactful research, aligning with its commitment to excellence and meaningful societal contributions.